Difference between revisions of "Optimization problem"


Revision as of 17:40, 12 March 2019

The general nonlinear optimization problem [1] can be formulated as follows: find a minimum of the objective function ϕ(x), where x lies in the intersection of the N-dimensional search space Ω ⊆ ℝ^N and the admissible region ℱ ⊆ ℝ^N defined by a set of equality and/or inequality constraints on x. Since the equality g_s(x) = 0 can be replaced by the two inequalities g_s(x) ≤ 0 and −g_s(x) ≤ 0, the admissible region can be defined without loss of generality as ℱ = {x ∈ ℝ^N : g_s(x) ≤ 0 for every constraint g_s}.

To obtain a solution that lies inside ℱ, we minimize the penalty function ψ(x).

The problem can be solved by various optimization methods. The following ones are implemented in the BioUML software:

- stochastic ranking evolution strategy (SRES) [1];

- cellular genetic algorithm MOCell [2];

- particle swarm optimization (PSO) [3];

- deterministic method of global optimization glbSolve [4];

- adaptive simulated annealing (ASA) [5].

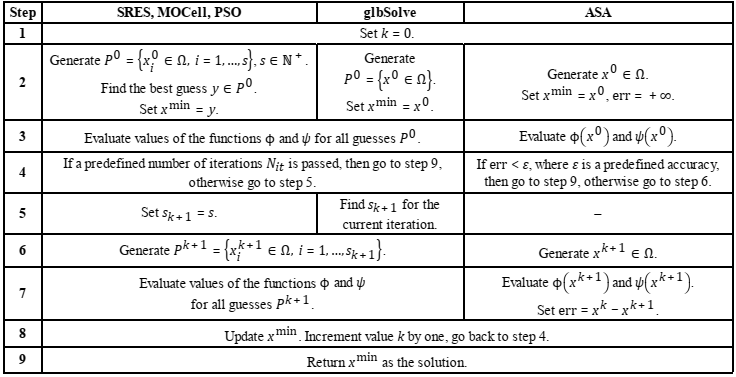

The table below shows the generic scheme of the optimization process for these methods. SRES, MOCell, PSO and glbSolve run a predefined number of iterations N_it, considering a sequence of sets (populations) P_i, i = 0,…,N_it − 1, of potential solutions (guesses). In the first three methods the population size s ∈ ℕ+ is fixed, whereas in glbSolve the initial population P_0 consists of a single guess and the size s_{k+1} of the population P_{k+1} is determined during iteration k = 0,…,N_it − 1. ASA considers sequentially generated guesses x_k ∈ Ω, k ∈ ℕ+, and stops when the distance between x_k and x_{k+1}, defined as the Euclidean norm ‖x_{k+1} − x_k‖, becomes less than a predefined accuracy ε.
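The ASA stopping rule described above can be sketched in a few lines of Python. This is an illustration only: the function names and the sample guesses are hypothetical and are not taken from BioUML.

```python
import math

def euclidean_distance(x, y):
    """Euclidean norm of the difference between two guesses."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def first_converged_index(guesses, eps):
    """Return the first k with ||x_{k+1} - x_k|| < eps, or None if there is none."""
    for k in range(len(guesses) - 1):
        if euclidean_distance(guesses[k], guesses[k + 1]) < eps:
            return k
    return None

# Hypothetical sequence of guesses in a two-dimensional search space.
guesses = [(0.0, 0.0), (3.0, 4.0), (3.0, 4.05), (3.0, 4.051)]
print(first_converged_index(guesses, 0.01))
```

With a looser accuracy the rule fires earlier in the sequence; with a very strict one it may never fire within the guesses available.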

All methods except glbSolve are stochastic and seek a global minimum of the function ϕ while taking the admissible region ℱ into account. At any iteration, a guess x ∈ Ω is preferred to a guess y ∈ Ω if ψ(x) = 0 and ψ(y) ≠ 0, or if ψ(x) < ψ(y). The glbSolve method is suited only to problems with Ω ⊆ ℱ: values of the function ψ are calculated, but they do not affect the generation of potential solutions.
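The preference rule can be illustrated with a short Python sketch. The page does not reproduce the formula for ψ here, so the sketch assumes a common choice, the sum of squared violations of the constraints g_s(x) ≤ 0. The function names and the two example constraints are hypothetical.

```python
def penalty(x, constraints):
    """Assumed penalty: sum of squared violations of g_s(x) <= 0."""
    return sum(max(0.0, g(x)) ** 2 for g in constraints)

def is_preferred(x, y, constraints):
    """True if guess x is preferred to guess y by the rule stated in the text."""
    px, py = penalty(x, constraints), penalty(y, constraints)
    return (px == 0 and py != 0) or px < py

# Hypothetical constraints: x0 + x1 - 1 <= 0 and -x0 <= 0.
constraints = [lambda x: x[0] + x[1] - 1.0, lambda x: -x[0]]

feasible = (0.2, 0.3)     # penalty 0
slightly_out = (1.0, 1.0) # penalty 1
far_out = (2.0, 2.0)      # penalty 9
print(is_preferred(feasible, slightly_out, constraints))
print(is_preferred(far_out, slightly_out, constraints))
```

Under this rule two guesses with the same penalty are not ordered relative to each other; the sketch does not attempt to say how such ties are resolved.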

Application of non-linear optimization to systems biology

We assume that a mathematical model of a biological process consists of a set of chemical species S = {S_1,…,S_m}, associated with variables C(t) = (C_1(t),…,C_m(t)) representing their concentrations, and a set of biochemical reactions ℛ = {R_1,…,R_n} with rates v(t) = (v_1(t),…,v_n(t)) that depend on a set of kinetic constants K. Reaction rates are modeled by the standard laws of chemical kinetics. A Cauchy problem for ordinary differential equations, whose right-hand sides are linear combinations of the reaction rates, describes the model behavior over time:

Here N is the n-by-m stoichiometric matrix. We say that C^ss is a steady state of the system (*) if the time derivative dC/dt vanishes at C = C^ss.
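As a minimal sketch, assume that the system (*) has the usual form dC/dt = N·v(C, K) with C(0) = C^0. The Python code below integrates a hypothetical two-species model A ⇌ B with the explicit Euler method and checks the steady-state condition. The model, the rate constants, the storage layout of the matrix (one row per species, one column per reaction) and all function names are assumptions made for illustration; a production solver would use an adaptive ODE method instead of fixed-step Euler.

```python
def rates(C, K):
    """Mass-action rates of the hypothetical reactions A -> B (k1) and B -> A (k2)."""
    a, b = C
    k1, k2 = K
    return [k1 * a, k2 * b]

# Stoichiometric coefficients, stored with one row per species and
# one column per reaction (assumed layout).
N = [[-1, 1],
     [1, -1]]

def rhs(C, K):
    """Right-hand side N * v(C, K) of the ODE system."""
    v = rates(C, K)
    return [sum(N[i][j] * v[j] for j in range(len(v))) for i in range(len(N))]

def integrate(C0, K, dt, steps):
    """Fixed-step explicit Euler integration starting from C0."""
    C = list(C0)
    for _ in range(steps):
        dC = rhs(C, K)
        C = [c + dt * d for c, d in zip(C, dC)]
    return C

def is_steady_state(C, K, tol=1e-9):
    """True if every component of dC/dt is below tol in absolute value."""
    return all(abs(d) < tol for d in rhs(C, K))

K = (2.0, 1.0)
C_end = integrate((3.0, 0.0), K, dt=0.01, steps=5000)
print(C_end, is_steady_state(C_end, K, tol=1e-6))
```

For these constants the total concentration is conserved, and the trajectory settles where the forward and backward rates balance.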

Identification of the parameters K and initial concentrations C^0 is based on experimental data represented by a set of points C_i^exp(t_{i,j}) that define the dynamics of the variables C_1(t),…,C_l(t), l ≤ m, at given times t_{i,j}, j = 1,…,r_i, where r_i is the number of such points for the concentration C_i(t), i = 1,…,l. The parameter identification problem consists in minimizing the function of deviations, defined as the normalized sum of squares [6]:

where the normalization factors ω_min/ω_i, with ω_min = min_i ω_i, give all concentration trajectories similar importance. The weights ω_i are calculated from the experimentally measured concentrations by one of the following formulas:

- mean square value,

- mean value,

- standard deviation.
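The normalized sum of squares can be sketched in Python as follows. The exact formulas are not reproduced on this page, so the sketch assumes that each squared residual of species i is multiplied by ω_min/ω_i and that the "mean square value" weight is the root of the mean of the squared measurements. Both choices, together with the function names and sample data, are assumptions made for illustration.

```python
import math

def mean_square_weight(values):
    """Assumed 'mean square value' weight: root of the mean of squared measurements."""
    return math.sqrt(sum(v * v for v in values) / len(values))

def deviation(simulated, experimental, weights):
    """Normalized sum of squared residuals over species i and time points j."""
    w_min = min(weights)
    total = 0.0
    for sim_i, exp_i, w_i in zip(simulated, experimental, weights):
        total += (w_min / w_i) * sum((s - e) ** 2 for s, e in zip(sim_i, exp_i))
    return total

# Hypothetical data: two species measured at two time points each.
experimental = [[3.0, 4.0], [30.0, 40.0]]
simulated = [[4.0, 4.0], [40.0, 40.0]]
weights = [mean_square_weight(series) for series in experimental]
print(deviation(simulated, experimental, weights))
```

In this example the second species has concentrations ten times larger than the first, and the factor ω_min/ω_i scales its contribution down so that it does not dominate the sum.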

If we want to consider additional constraints

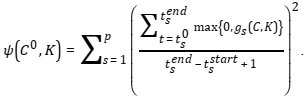

that hold for the concentrations C(t) and parameters K over some period of time, the penalty function is defined as

This function sums the values g_s(C, K) over the nodes of the time grid that the ODE solver uses to compute a numerical solution of the system (*).
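The summation over grid nodes can be sketched as below. The penalty formula itself is not reproduced on this page, so the sketch assumes that only violations of g_s(C, K) ≤ 0 contribute, that is, it sums max(0, g_s) over all nodes and all constraints. The function name, the constraint and the trajectory are hypothetical.

```python
def grid_penalty(trajectory, K, constraints):
    """Sum the violations max(0, g_s(C, K)) over all grid nodes (assumed form)."""
    total = 0.0
    for C in trajectory:        # one concentration vector per grid node
        for g in constraints:   # each constraint is g_s(C, K) <= 0
            total += max(0.0, g(C, K))
    return total

# Hypothetical constraint: the first concentration must not exceed K[0].
constraints = [lambda C, K: C[0] - K[0]]

# Hypothetical solver output at three grid nodes.
trajectory = [[0.5], [1.5], [2.0]]
print(grid_penalty(trajectory, [1.0], constraints))
```

A trajectory that satisfies every constraint at every node yields a penalty of zero under this assumption.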

In a particular case, the experimental data may consist of steady-state values of species concentrations. The functions ϕ and ψ then take simpler forms:

where C_i^exp_ss and C_i^ss, i = 1,…,l, denote the experimental and simulated steady-state values.

Typically, researchers want to estimate model parameters from experimental data obtained under different experimental conditions, i.e. with different initial concentrations C^01,…,C^0k of the species. In this case, we consider the functions

References

1. Runarsson T.P., Yao X. Stochastic ranking for constrained evolutionary optimization. IEEE Transactions on Evolutionary Computation. 2000. 4(3):284–294.

2. Nebro A.J., Durillo J.J., Luna F., Dorronsoro B., Alba E. MOCell: A cellular genetic algorithm for multiobjective optimization. International Journal of Intelligent Systems. 2009. 24(7):726–746.

3. Sierra M.R., Coello C.A. Improving PSO-Based Multi-objective Optimization Using Crowding, Mutation and ∈-Dominance. Evolutionary Multi-Criterion Optimization. Lecture Notes in Computer Science. 2005. 3410:505–519.

4. Björkman M., Holmström K. Global Optimization Using the DIRECT Algorithm in Matlab. Advanced Modeling and Optimization. 1999. 1(2):17–37.

5. Ingber L. Adaptive simulated annealing (ASA): Lessons learned. Control and Cybernetics. 1996. 25(1):33–54.

6. Hoops S., Sahle S., Gauges R., Lee C., Pahle J., Simus N., Singhal M., Xu L., Mendes P., Kummer U. COPASI-a COmplex PAthway SImulator. Bioinformatics. 2006. 22(24):3067–3074.